Raw Nbconvert Jupyter

$ jupyter nbconvert --to <output format> <input notebook>, where <output format> is the desired output format and <input notebook> is the filename of the Jupyter notebook. Example: Convert a notebook to HTML. Convert the Jupyter notebook file mynotebook.ipynb to HTML using:

$ jupyter nbconvert --to html mynotebook.ipynb

This command creates an HTML output file named mynotebook.html.

- From the command line, use nbconvert to convert a Jupyter notebook (input) to a different format (output). The basic command structure is: $ jupyter nbconvert --to <output format> <input notebook>, where <output format> is the desired output format and <input notebook> is the filename of the Jupyter notebook.

- Nbconvert configuration for converting a Jupyter notebook into an HTML output with images saved separately, intended to be embedded into a Jekyll site.

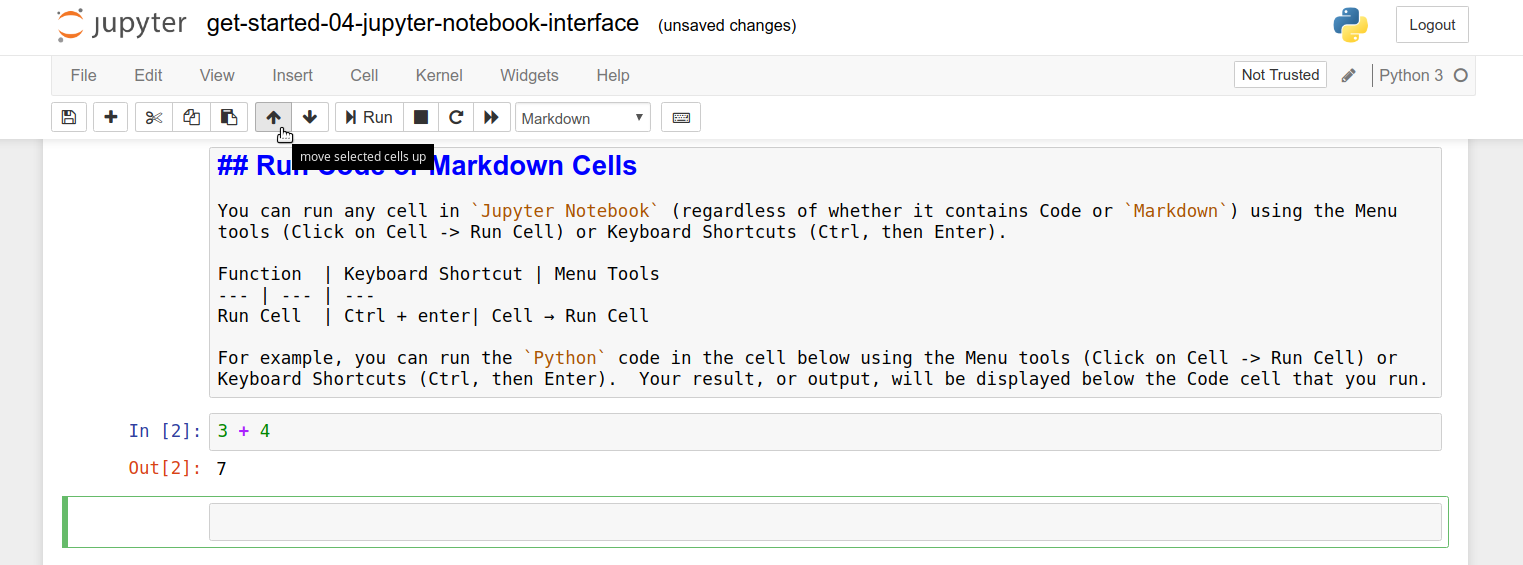

- Cell type options include Code, Markdown, Raw NBConvert (for text that should remain unmodified by nbconvert), and Heading. You can change the cell type of any cell in Jupyter Notebook using the toolbar. The default cell type is Code. To use the keyboard shortcuts, press the Esc key.

In this tutorial, you learn how to convert Jupyter notebooks into Python scripts that are easier to test and automate, using the MLOpsPython code template and Azure Machine Learning. Typically, this process is used to take experimentation and training code from a Jupyter notebook and convert it into Python scripts. Those scripts can then be used for testing and CI/CD automation in your production environment.

A machine learning project requires experimentation where hypotheses are tested with agile tools like Jupyter Notebook using real datasets. Once the model is ready for production, the model code should be placed in a production code repository. In some cases, the model code must be converted to Python scripts to be placed in the production code repository. This tutorial covers a recommended approach on how to export experimentation code to Python scripts.

In this tutorial, you learn how to:

- Clean nonessential code

- Refactor Jupyter Notebook code into functions

- Create Python scripts for related tasks

- Create unit tests

Prerequisites

- Generate the MLOpsPython template and use the experimentation/Diabetes Ridge Regression Training.ipynb and experimentation/Diabetes Ridge Regression Scoring.ipynb notebooks. These notebooks are used as an example of converting from experimentation to production. You can find these notebooks at https://github.com/microsoft/MLOpsPython/tree/master/experimentation.

- Install nbconvert. Follow only the installation instructions under section Installing nbconvert on the Installation page.

Remove all nonessential code

Some code written during experimentation is only intended for exploratory purposes. Therefore, the first step to convert experimental code into production code is to remove this nonessential code. Removing nonessential code will also make the code more maintainable. In this section, you'll remove code from the experimentation/Diabetes Ridge Regression Training.ipynb notebook. The statements printing the shape of X and y and the cell calling features.describe are just for data exploration and can be removed. After removing nonessential code, experimentation/Diabetes Ridge Regression Training.ipynb should look like the following code without markdown:
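For orientation, here is a minimal sketch of what the cleaned notebook could look like once its cells are concatenated. It makes several assumptions: the scikit-learn diabetes sample dataset (load_diabetes), a pandas DataFrame df with the target in a column named Y, and a Ridge model. The 80/20 split, random_state=0, alpha=0.5, and the model file name are illustrative values and may not match the ones in the MLOpsPython notebook.

```python
# Sketch of the cleaned training notebook: exploration cells removed, markdown dropped.
# Assumptions: scikit-learn's diabetes sample data, a Ridge model, illustrative hyperparameters.
import os
import tempfile

import joblib
import pandas as pd
from sklearn.datasets import load_diabetes
from sklearn.linear_model import Ridge
from sklearn.metrics import mean_squared_error
from sklearn.model_selection import train_test_split

# Load Data
sample_data = load_diabetes()
df = pd.DataFrame(data=sample_data.data, columns=sample_data.feature_names)
df["Y"] = sample_data.target

# Split Data into Training and Validation Sets
X = df.drop("Y", axis=1).values
y = df["Y"].values
X_train, X_test, y_train, y_test = train_test_split(
    X, y, test_size=0.2, random_state=0)
data = {"train": {"X": X_train, "y": y_train},
        "test": {"X": X_test, "y": y_test}}

# Training Model on Training Set
args = {"alpha": 0.5}
reg_model = Ridge(**args)
reg_model.fit(data["train"]["X"], data["train"]["y"])

# Validate Model on Validation Set
preds = reg_model.predict(data["test"]["X"])
mse = mean_squared_error(data["test"]["y"], preds)
metrics = {"mse": mse}
print(metrics)

# Save Model (written to the temp directory here so the sketch leaves the cwd untouched)
model_name = "sklearn_regression_model.pkl"
model_path = os.path.join(tempfile.gettempdir(), model_name)
joblib.dump(value=reg_model, filename=model_path)
```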

Refactor code into functions

Second, the Jupyter code needs to be refactored into functions. Refactoring code into functions makes unit testing easier and makes the code more maintainable. In this section, you'll refactor:

- The Diabetes Ridge Regression Training notebook (experimentation/Diabetes Ridge Regression Training.ipynb)

- The Diabetes Ridge Regression Scoring notebook (experimentation/Diabetes Ridge Regression Scoring.ipynb)

Refactor Diabetes Ridge Regression Training notebook into functions

In experimentation/Diabetes Ridge Regression Training.ipynb, complete the following steps:

- Create a function called split_data to split the data frame into test and train data. The function should take the dataframe df as a parameter and return a dictionary containing the keys train and test.

- Move the code under the Split Data into Training and Validation Sets heading into the split_data function and modify it to return the data object.

- Create a function called train_model, which takes the parameters data and args and returns a trained model.

- Move the code under the heading Training Model on Training Set into the train_model function and modify it to return the reg_model object. Remove the args dictionary; the values will come from the args parameter.

- Create a function called get_model_metrics, which takes the parameters reg_model and data, evaluates the model, and returns a dictionary of metrics for the trained model.

- Move the code under the Validate Model on Validation Set heading into the get_model_metrics function and modify it to return the metrics object.

The three functions should be as follows:
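The three functions might look like the following sketch. It assumes scikit-learn's diabetes dataset loaded into a DataFrame with a Y target column, a Ridge model, and mean squared error as the only metric. The split ratio, random_state, and alpha value are illustrative.

```python
import pandas as pd
from sklearn.datasets import load_diabetes
from sklearn.linear_model import Ridge
from sklearn.metrics import mean_squared_error
from sklearn.model_selection import train_test_split


def split_data(df):
    """Split the data frame into train and test sets, keyed by "train" and "test"."""
    X = df.drop("Y", axis=1).values
    y = df["Y"].values
    X_train, X_test, y_train, y_test = train_test_split(
        X, y, test_size=0.2, random_state=0)
    data = {"train": {"X": X_train, "y": y_train},
            "test": {"X": X_test, "y": y_test}}
    return data


def train_model(data, args):
    """Fit a Ridge model; hyperparameters come from the args dictionary."""
    reg_model = Ridge(**args)
    reg_model.fit(data["train"]["X"], data["train"]["y"])
    return reg_model


def get_model_metrics(reg_model, data):
    """Evaluate the model on the test split and return a dictionary of metrics."""
    preds = reg_model.predict(data["test"]["X"])
    mse = mean_squared_error(data["test"]["y"], preds)
    metrics = {"mse": mse}
    return metrics


# Quick check on the diabetes sample data (alpha=0.5 is illustrative).
sample_data = load_diabetes()
df = pd.DataFrame(data=sample_data.data, columns=sample_data.feature_names)
df["Y"] = sample_data.target
data = split_data(df)
reg_model = train_model(data, {"alpha": 0.5})
print(get_model_metrics(reg_model, data))
```

Passing hyperparameters through args instead of hard-coding them is what lets a caller, such as a pipeline step or a unit test, choose them.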

Still in experimentation/Diabetes Ridge Regression Training.ipynb, complete the following steps:

- Create a new function called main, which takes no parameters and returns nothing.

- Move the code under the 'Load Data' heading into the main function.

- Add invocations of the newly written functions (split_data, train_model, and get_model_metrics) to the main function.

- Move the code under the 'Save Model' heading into the main function.

The main function should look like the following code:

At this stage, there should be no code remaining in the notebook that isn't in a function, other than import statements in the first cell.

Add a statement that calls the main function.

After refactoring, experimentation/Diabetes Ridge Regression Training.ipynb should look like the following code without the markdown:

Refactor Diabetes Ridge Regression Scoring notebook into functions

In experimentation/Diabetes Ridge Regression Scoring.ipynb, complete the following steps:

- Create a new function called init, which takes no parameters and returns nothing.

- Copy the code under the 'Load Model' heading into the init function.

The init function should look like the following code:

Once the init function has been created, replace all the code under the 'Load Model' heading with a single call to init().

In experimentation/Diabetes Ridge Regression Scoring.ipynb, complete the following steps:

- Create a new function called run, which takes raw_data and request_headers as parameters and returns a dictionary of results.

- Copy the code under the 'Prepare Data' and 'Score Data' headings into the run function.

The run function should look like the following code (remember to remove the statements that set the variables raw_data and request_headers, which will be used later when the run function is called):

Once the run function has been created, replace all the code under the 'Prepare Data' and 'Score Data' headings with the following code:
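Here is a sketch of the run function followed by the replacement cell. It assumes the request body is a JSON string with a "data" key holding a list of 10-feature rows (the diabetes dataset has 10 features). The Ridge model fitted on random data is a hypothetical stand-in for the model that init loads, so the snippet runs on its own.

```python
import json

import numpy as np
from sklearn.linear_model import Ridge

# Hypothetical stand-in for the loaded model: Ridge fitted on random 10-feature data.
rng = np.random.default_rng(0)
model = Ridge(alpha=0.5).fit(rng.normal(size=(20, 10)), rng.normal(size=20))


def run(raw_data, request_headers):
    # Assumed payload shape: {"data": [[feature values], ...]}
    data = json.loads(raw_data)["data"]
    data = np.array(data)
    result = model.predict(data)
    # Convert the NumPy array to a list so the result is JSON-serializable
    return {"result": result.tolist()}


# Replacement for the 'Prepare Data' and 'Score Data' cells
raw_data = '{"data": [[1, 2, 3, 4, 5, 6, 7, 8, 9, 10], [10, 9, 8, 7, 6, 5, 4, 3, 2, 1]]}'
request_header = {}
prediction = run(raw_data, request_header)
print("Test result: ", prediction)
```

Returning plain Python lists rather than a NumPy array keeps the response directly serializable with json.dumps.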

The previous code sets variables raw_data and request_header, calls the run function with raw_data and request_header, and prints the predictions.

After refactoring, experimentation/Diabetes Ridge Regression Scoring.ipynb should look like the following code without the markdown:

Combine related functions in Python files

Third, related functions need to be merged into Python files to make them easier to reuse. In this section, you'll create Python files for the following notebooks:

- The Diabetes Ridge Regression Training notebook (experimentation/Diabetes Ridge Regression Training.ipynb)

- The Diabetes Ridge Regression Scoring notebook (experimentation/Diabetes Ridge Regression Scoring.ipynb)

Create Python file for the Diabetes Ridge Regression Training notebook

Convert your notebook to an executable script by running the following nbconvert command in a command prompt, passing the path of experimentation/Diabetes Ridge Regression Training.ipynb:

$ jupyter nbconvert "experimentation/Diabetes Ridge Regression Training.ipynb" --to script --output train
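The same conversion can be sketched with nbconvert's Python API, which is handy for previewing what the script will contain before cleaning it up. The notebook here is a small hypothetical one built in memory with nbformat. The sketch uses PythonExporter directly, which should give roughly the Python source that --to script produces for a notebook with a Python kernel. Markdown cells come out as # comment lines, and code cells are preceded by prompt comments such as # In[ ]:. These are the kind of unwanted comments to remove afterwards.

```python
import nbformat
from nbconvert import PythonExporter

# A tiny hypothetical notebook: one markdown heading and two code cells.
nb = nbformat.v4.new_notebook()
nb.cells = [
    nbformat.v4.new_markdown_cell("## Load Data"),
    nbformat.v4.new_code_cell("def main():\n    print('training')"),
    nbformat.v4.new_code_cell("main()"),
]

# Export the notebook node to Python source; resources holds exporter metadata.
body, resources = PythonExporter().from_notebook_node(nb)
print(body)
```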

Once the notebook has been converted to train.py, remove any unwanted comments. Replace the call to main() at the end of the file with a conditional invocation like the following code:

Your train.py file should look like the following code:
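Here is a sketch of train.py after conversion and cleanup. It assumes the functions follow the refactoring steps (split_data, train_model, get_model_metrics, and main). The dataset, alpha value, and model file name are illustrative assumptions. The if __name__ == "__main__" guard is the conditional invocation: main() runs when the file is executed directly but not when another module imports its functions.

```python
# train.py (sketch)
import joblib
import pandas as pd
from sklearn.datasets import load_diabetes
from sklearn.linear_model import Ridge
from sklearn.metrics import mean_squared_error
from sklearn.model_selection import train_test_split


def split_data(df):
    # Split the data frame into train/test dictionaries.
    X = df.drop("Y", axis=1).values
    y = df["Y"].values
    X_train, X_test, y_train, y_test = train_test_split(
        X, y, test_size=0.2, random_state=0)
    return {"train": {"X": X_train, "y": y_train},
            "test": {"X": X_test, "y": y_test}}


def train_model(data, args):
    # Fit a Ridge model with hyperparameters taken from args.
    reg_model = Ridge(**args)
    reg_model.fit(data["train"]["X"], data["train"]["y"])
    return reg_model


def get_model_metrics(reg_model, data):
    # Mean squared error on the test split.
    preds = reg_model.predict(data["test"]["X"])
    return {"mse": mean_squared_error(data["test"]["y"], preds)}


def main():
    # Load Data
    sample_data = load_diabetes()
    df = pd.DataFrame(data=sample_data.data, columns=sample_data.feature_names)
    df["Y"] = sample_data.target

    # Split, train, and validate using the functions above
    data = split_data(df)
    args = {"alpha": 0.5}
    reg_model = train_model(data, args)
    metrics = get_model_metrics(reg_model, data)
    print(metrics)

    # Save Model
    model_name = "sklearn_regression_model.pkl"
    joblib.dump(value=reg_model, filename=model_name)


# main() runs only when the file is executed as a script (python train.py),
# not when another module or a test does "from train import train_model".
if __name__ == "__main__":
    main()
```

Because of the guard, a unit test can import train_model without triggering a full training run.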

train.py can now be invoked from a terminal by running python train.py. The functions from train.py can also be called from other files.

The train_aml.py file found in the diabetes_regression/training directory in the MLOpsPython repository calls the functions defined in train.py in the context of an Azure Machine Learning experiment run. The functions can also be called in unit tests, covered later in this guide.

Create Python file for the Diabetes Ridge Regression Scoring notebook

Convert your notebook to an executable script by running the following nbconvert command in a command prompt, passing the path of experimentation/Diabetes Ridge Regression Scoring.ipynb:

$ jupyter nbconvert "experimentation/Diabetes Ridge Regression Scoring.ipynb" --to script --output score

Once the notebook has been converted to score.py, remove any unwanted comments. Your score.py file should look like the following code:

The model variable needs to be global so that it's visible throughout the script. Add the statement global model at the beginning of the init function.

After adding the previous statement, the init function should look like the following code:
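To see why the global statement matters, the following sketch compares an init that assigns a local variable with one that declares global model. MODEL_PATH and the tiny saved model are hypothetical. The real init may locate the model differently, for example through Azure Machine Learning.

```python
import os
import tempfile

import joblib
import numpy as np
from sklearn.linear_model import Ridge

# Hypothetical local model file, created here so the sketch runs on its own.
MODEL_PATH = os.path.join(tempfile.gettempdir(), "sklearn_regression_model.pkl")
joblib.dump(Ridge(alpha=0.5).fit([[0.0], [1.0], [2.0]], [0.0, 1.0, 2.0]), MODEL_PATH)

model = None


def init_without_global():
    # Assigns a local variable; the module-level model stays None.
    model = joblib.load(MODEL_PATH)


def init():
    # "global model" rebinds the module-level name, so run() and any other
    # function in score.py can use the loaded model.
    global model
    model = joblib.load(MODEL_PATH)


init_without_global()
print(model is None)  # True: the local assignment did not reach module scope

init()
print(model.predict(np.array([[3.0]])))
```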

Create unit tests for each Python file

Fourth, create unit tests for your Python functions. Unit tests protect code against functional regressions and make it easier to maintain. In this section, you'll be creating unit tests for the functions in train.py.

train.py contains multiple functions, but we'll only create a single unit test for the train_model function using the Pytest framework in this tutorial. Pytest isn't the only Python unit testing framework, but it's one of the most commonly used. For more information, visit Pytest.

A unit test usually contains three main actions:

- Arrange: create and set up the necessary objects

- Act: act on an object

- Assert: check what is expected

The unit test will call train_model with some hard-coded data and arguments, and validate that train_model acted as expected by using the resulting trained model to make a prediction and comparing that prediction to an expected value.
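Here is a minimal sketch of such a test, written for pytest. To keep it self-contained, train_model is redefined inline, assuming a Ridge-based train_model(data, args) as described in the refactoring steps. In the repository you would import it from train.py instead. The expected values come from the closed-form ridge solution for this one-feature dataset with alpha=1.2 and an unpenalized intercept.

```python
import numpy as np
from sklearn.linear_model import Ridge


# Stand-in for "from train import train_model", copied so the sketch runs on its own.
def train_model(data, args):
    reg_model = Ridge(**args)
    reg_model.fit(data["train"]["X"], data["train"]["y"])
    return reg_model


def test_train_model():
    # Arrange: hard-coded single-feature training data and hyperparameters
    X_train = np.array([1, 2, 3, 4, 5, 6]).reshape(-1, 1)
    y_train = np.array([10, 9, 8, 8, 6, 5])
    data = {"train": {"X": X_train, "y": y_train}}

    # Act: train the model and predict for two inputs
    reg_model = train_model(data, {"alpha": 1.2})
    preds = reg_model.predict([[1], [2]])

    # Assert: slope = -17 / (17.5 + 1.2) = -10/11, intercept = 358/33,
    # so the predictions are 328/33 and 298/33
    np.testing.assert_almost_equal(preds, [9.93939393939394, 9.03030303030303])


if __name__ == "__main__":
    test_train_model()
    print("test_train_model passed")
```

When you run pytest in the project directory, it discovers files named test_*.py and collects the functions in them whose names start with test_. No extra registration is needed.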

Next steps

Now that you understand how to convert from an experiment to production code, see the following links for more information and next steps:

- MLOpsPython: Build a CI/CD pipeline to train, evaluate and deploy your own model using Azure Pipelines and Azure Machine Learning